Introduction

Platform Engineering has become the talk of the town, and with the rise of tooling aimed at helping teams create an IDP (Internal Developer Platform), it makes sense to take a look at what’s on offer. One such tool is Port. Port has a lot of features, including Software Catalog, Automation, RBAC, Self-Service Actions and Scorecards, as well as integrations with tools such as Datadog, Jira, PagerDuty, GitHub and ArgoCD. Port can also import your existing cloud resources from AWS, Azure and GCP.

Our current cloud provider is Azure, and our IaC is written in Bicep and deployed via Azure Pipelines. Port, however, does not support Bicep (or ARM) as a provider for importing resources from Azure. The question then is: could Port be used to create a self-service portal using Bicep and Azure Pipelines?

The answer is yes. In this article we are going to look at creating a small self-service portal using the technologies mentioned above.

Blank Slate

When you first log in to Port you are presented with a blank slate and the feeling of not being sure what to do next. Fortunately, there is a Live Demo site which shows how some of the pieces fit together, and there is a lot of documentation as well as an active community to help out too.

Port can be configured from code and supports a number of providers; however, for this article we are just going to add blueprints via the UI.

First Blueprint

Let’s first create a Cloud Host blueprint we can use to store information such as locations for use with the cloud provider (in this case Azure).

To add a new blueprint, select the button on the right-hand side of the Builder screen.

Then fill in the name as Cloud Host and pick an icon.

Once this has been created we can add some properties to associate with the provider.

Let’s start with some locations to use when creating resources.

At this point there is just a blueprint for the cloud host. To be useful, it needs some Cloud Host entities in the Catalog, and because Port has no ingestion support for Bicep we’ll have to add these manually.

After adding the Cloud Host details manually the catalog looks like this:

Note: In this demo we are just using the location but other information could be added for each configuration.

Resource Blueprint

Let’s head back to the Builder screen and, as before, add a new blueprint, this time for creating an Azure resource.

Once created we can add a relation link to the Cloud Host blueprint.

As with Cloud Host, we can also add properties to the Create Resource blueprint that we might want to see in the catalog, e.g. IaC used, Environment, App name, etc.

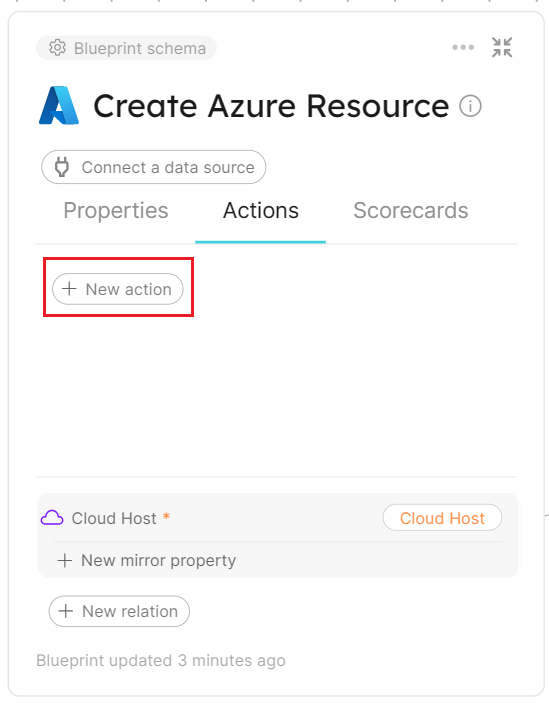

Actions with Azure DevOps

Next up is to add some actions to the blueprint so that we can actually create some resources.

For our new Action, let’s create a small Azure resource like an Azure Storage Account.

On the next screen we get to define which parameters the user must provide when the action is run. For this example we will add AppName, Location, Environment, Sku and Access Tier.

Note: Location is going to be linked to the Cloud Host blueprint using the Entity Selection property type.

Note: The identifiers for the form fields must match the parameter names expected by the Bicep template, e.g. Access Tier would default to access_tier but the Bicep parameter might be accessTier.
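To make that naming constraint concrete, here is a minimal sketch of what a storage account template for this action might look like. It is not the template from the article's repository: the parameter names, the naming scheme and the API version are all assumptions for illustration. The pipeline later in this article writes every form field into the parameters file as a `param`, adds `runId`, and reads an output called `resourceName`, so the sketch declares all of those.

```bicep
// Hypothetical storage account template; parameter names are assumed to
// match the identifiers of the form fields defined on the Port action.

@description('Port run ID, written into the parameters file by the pipeline.')
param runId string

@description('Form field identifiers assumed to be appname, environment and location.')
param appname string
param environment string
param location string

@description('Assumed identifiers for the Sku and Access Tier form fields.')
param sku string = 'Standard_LRS'
param accessTier string = 'Hot'

// Storage account names must be 3-24 lowercase letters and digits, so this
// assumes appname and environment contain no hyphens or other symbols.
var storageName = toLower(take('st${appname}${environment}${uniqueString(resourceGroup().id)}', 24))

resource storage 'Microsoft.Storage/storageAccounts@2023-01-01' = {
  name: storageName
  location: location
  kind: 'StorageV2'
  sku: {
    name: sku
  }
  properties: {
    accessTier: accessTier
  }
  tags: {
    portRunId: runId
  }
}

// The pipeline queries this output to use as the entity identifier in Port.
output resourceName string = storage.name
```

If the Access Tier field kept its default identifier of access_tier, the generated parameters file would assign a parameter this template does not declare, and the deployment would be expected to fail, which is why the identifiers need to line up.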

The next part is to configure the backend. For this we are going to hook up Azure Pipelines; this page provides a client secret to add to Azure DevOps.

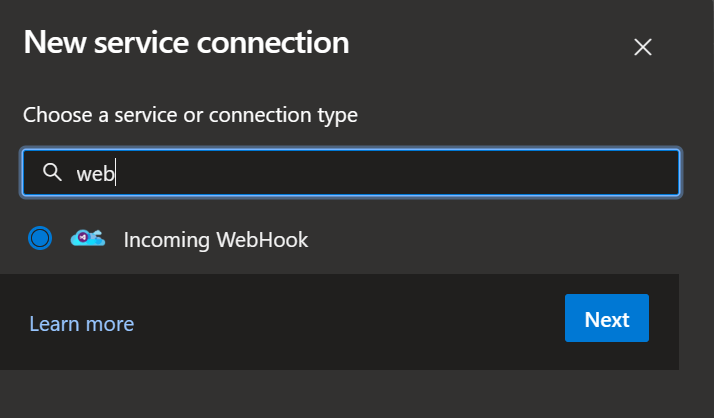

In an Azure DevOps project, set up a service connection of type “Incoming Webhook” for Port to connect to.

Fill in the details from the Port configuration, including the client secret and HTTP header.

Once saved, fill in the details back in Port and go to the final page, which is permissions.

For the purposes of this demo we will leave this as is with the default settings.

Pipeline

Now that everything is configured in Port, we need to add a pipeline to Azure DevOps that triggers on a selection from Port. The Backend page in the action setup gives an example of a starting pipeline, but additional steps are needed to support creating resources using Bicep, and we also didn’t think a multiple-job configuration was needed for this purpose.

The pipeline below is triggered by the configured webhook; it deploys Azure resources using a Bicep template and communicates with Port.io. Key steps include fetching an access token, creating a Bicep parameters file, deploying resources, and updating Port.io with deployment status and information. The pipeline also includes logging steps, a display of outputs, and calls to the Port.io API for entity upserts and status updates.

The goal is a single pipeline that can be reused for building different kinds of resources, instead of having a separate pipeline for each resource. After multiple runs and attempts, we finally arrived at this configuration.

trigger: none

pr: none

resources:
  webhooks:
    - webhook: incomingport
      connection: Port.io

variables:
  subscription: 'MySubscription'
  System.debug: true
  runId: ${{ parameters.incomingport.context.runId }}
  deployParametersFileName: 'deploy.bicepparam'
  deployFileName: deploy_${{ lower(replace(parameters.incomingport.action,'create_', '')) }}.bicep
  deployStatus: "FAILURE"
  deployStatusMessage: "Azure Resource Creation Failed"
  deployMessage: "Deployment Pipeline Failed"

stages:
  - stage: run_resource_creation
    displayName: 'Run Resource Creation'
    jobs:
      - job: fetch_port_access_token
        displayName: 'Create Resources'
        pool:
          vmImage: 'ubuntu-latest'
        steps:
          - script: |
              accessToken=$(curl -X POST \
                -H 'Content-Type: application/json' \
                -d '{"clientId": "$(PORT_CLIENT_ID)", "clientSecret": "$(PORT_CLIENT_SECRET)"}' \
                -s 'https://api.getport.io/v1/auth/access_token' | jq -r '.accessToken')
              echo "##vso[task.setvariable variable=accessToken;issecret=true]$accessToken"
              echo "runId=$(runId)"
            displayName: Fetch Access Token and Run ID
            name: getToken

          - template: templates/sendlogs.yml
            parameters:
              Message: "Create parameters file"
              AccessToken: $(accessToken)
              RunId: $(runId)
              conditionLevel: succeeded()

          - pwsh: |
              $obj = $env:payload | ConvertFrom-Json -AsHashtable
              $additionalObj = $env:entityPayload ?? @() | ConvertFrom-Json -AsHashtable
              $excludeList = @()
              $filename = "$env:deployParametersFileName"
              Out-File -FilePath $filename
              "using '$(deployFileName)'" | Out-File -FilePath $filename -Append
              "param runId = '$env:runId'" | Out-File -FilePath $filename -Append
              # Payload Properties
              ($obj.Keys | ForEach-Object {
                if ($_ -notin $excludeList) {
                  if($($obj[$_]).GetType().Name -eq "String") {
                    "param $_ = '$($obj[$_])'"
                  }
                  else {
                    "param $_ = $($obj[$_])"
                  }
                }
              }) | Out-File -FilePath $filename -Append
              # Entity Payload Properties
              if($additionalObj.count -ne 0) {
                $entityExcludeList = @("iac","provider","appname")
                ($additionalObj.Keys | ForEach-Object {
                  if ($_ -notin $entityExcludeList) {
                    if($($additionalObj[$_]).GetType().Name -eq "String") {
                      "param $_ = '$($additionalObj[$_])'"
                    }
                    else {
                      "param $_ = $($additionalObj[$_])"
                    }
                  }
                }) | Out-File -FilePath $filename -Append
                if($env:entityIdentifier -ne $null) {
                  "param parentName = '$env:entityIdentifier'" | Out-File -FilePath $filename -Append
                }
              }
            displayName: 'Create Parameters File'
            env:
              runId: $(runId)
              payload: ${{ convertToJson(parameters.incomingport.payload.properties) }}
              entityPayload: ${{ convertToJson(parameters.incomingport.payload.entity.properties) }}
              entityIdentifier: ${{ parameters.incomingport.payload.entity.identifier }}
              deployParametersFileName: $(deployParametersFileName)

          - bash: |
              cat $(deployParametersFileName)
            displayName: 'Show File'
            condition: and(succeeded(), eq(variables['System.debug'], 'true'))

          - template: templates/sendlogs.yml
            parameters:
              Message: "Deploying Resources"
              AccessToken: $(accessToken)
              RunId: $(runId)
              conditionLevel: succeeded()

          - task: AzureCLI@2
            displayName: "Deploy Resources"
            inputs:
              azureSubscription: $(subscription)
              scriptType: "pscore"
              scriptLocation: "inlineScript"
              inlineScript: |
                $outputStatus = "SUCCESS"
                $outputStatusMessage = "Azure Resource Creation Succeeded"
                $resourceGroupName = "$env:environment-$env:appname-rg"
                $deploymentName = "deploy_$env:runId"
                if($(az group exists --name $resourceGroupName) -eq $false) {
                  az group create --name $resourceGroupName --location $env:location
                }
                $output = $(az deployment group create --resource-group $resourceGroupName --template-file $env:deployFileName --parameters $env:deployParametersFileName --name $deploymentName 2>&1)
                if (!$?) {
                  $outputStatus = "FAILURE"
                  $outputStatusMessage = "Azure Resource Creation Failed"
                  try {
                    $obj = $output.Exception.Message -replace '["()]', '\$&'
                    $output = $obj
                  } catch {
                    $output = "Something went wrong"
                  }
                } else {
                  $output = $output -replace '["()]', '\$&'
                }
                $title = (Get-Culture).TextInfo.ToTitleCase($env:deployTitle)
                $resourceName = $(az deployment group show -g $resourceGroupName -n $deploymentName --query properties.outputs.resourceName.value -o tsv)
                Write-Host "##vso[task.setvariable variable=resourceName;]$resourceName"
                Write-Host "##vso[task.setvariable variable=deployMessage;]$output"
                Write-Host "##vso[task.setvariable variable=deployStatus;]$outputStatus"
                Write-Host "##vso[task.setvariable variable=deployStatusMessage;]$outputStatusMessage"
                Write-Host "##vso[task.setvariable variable=deployTitle;]$title"
            env:
              runId: $(runId)
              location: ${{ parameters.incomingport.payload.properties.location }}
              environment: ${{ coalesce(parameters.incomingport.payload.properties.environment, parameters.incomingport.payload.entity.properties.environment) }}
              appname: ${{ coalesce(parameters.incomingport.payload.properties.appname, parameters.incomingport.payload.entity.properties.appname) }}
              deployFileName: $(deployFileName)
              deployParametersFileName: $(deployParametersFileName)
              deployTitle: ${{ lower(replace(replace(parameters.incomingport.action,'create_', ''),'_',' ')) }}

          - script: |
              echo '$(resourceName)'
            displayName: 'Show Outputs'

          - script: |
              curl -X POST \
                -H 'Content-Type: application/json' \
                -H "Authorization: Bearer $(accessToken)" \
                -d '{
                  "identifier": "$(resourceName)",
                  "title": "$(deployTitle)",
                  "properties": {"environment": "${{ parameters.incomingport.payload.properties.environment }}","iac": "Bicep","appname": "${{ coalesce(parameters.incomingport.payload.properties.appname, parameters.incomingport.payload.properties.name) }}"},
                  "relations": {"cloud_host": "${{ parameters.incomingport.payload.properties.location }}"}
                }' \
                "https://api.getport.io/v1/blueprints/${{ parameters.incomingport.context.blueprint }}/entities?upsert=true&run_id=$(runId)&create_missing_related_entities=true"
            displayName: 'Upsert entity'

          - template: templates/sendlogs.yml
            parameters:
              Message: $(deployMessage)
              AccessToken: $(accessToken)
              RunId: $(runId)

          - template: templates/sendlogs.yml
            parameters:
              Message: "Deployment Finished"
              AccessToken: $(accessToken)
              RunId: $(runId)

          - template: templates/sendStatus.yml
            parameters:
              Status: $(deployStatus)
              Message: $(deployStatusMessage)
              AccessToken: $(accessToken)
              RunId: $(runId)
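As a rough illustration of what the 'Create Parameters File' step above writes to deploy.bicepparam, here is what the file might contain for a storage account request. The action identifier (assumed here to be create_storage_account, giving deploy_storage_account.bicep), the run ID and all the values are made up, and the order of the param lines depends on the order of the keys in the payload.

```bicep
// Hypothetical generated deploy.bicepparam; every value here is illustrative.
using 'deploy_storage_account.bicep'

// Always written by the pipeline from the Port run context.
param runId = 'r_exampleRunId'

// One line per form field: string values are quoted, other types are written as-is.
param appname = 'demo'
param environment = 'dev'
param location = 'uksouth'
param sku = 'Standard_LRS'
param accessTier = 'Hot'
```

Because the location value comes from the selected Cloud Host entity and is also passed to az group create, this assumes the Cloud Host identifiers are valid Azure region names.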

sendlogs.yml

parameters:
  - name: Message
    type: object
  - name: RunId
    type: string
  - name: AccessToken
    type: string
  - name: conditionLevel
    type: object
    default: always()
    values:
      - always()
      - succeeded()
      - failed()

steps:
  - bash: |
      curl -X POST \
        -H 'Content-Type: application/json' \
        -H "Authorization: Bearer ${{ parameters.AccessToken }}" \
        -d '{"message": "${{ parameters.Message }}"}' \
        "https://api.getport.io/v1/actions/runs/${{ parameters.RunId }}/logs"
    displayName: Send Logs
    condition: and(${{ parameters.conditionLevel }}, ne('${{ parameters.Message }}', ''))

sendStatus.yml

parameters:
  - name: Status
    type: string
    default: 'FAILURE'
  - name: Message
    type: string
    default: "Azure Resource Creation Successful"
  - name: RunId
    type: string
  - name: AccessToken
    type: string

steps:
  - bash: |
      curl -X PATCH \
        -H 'Content-Type: application/json' \
        -H "Authorization: Bearer ${{ parameters.AccessToken }}" \
        -d '{"status":"${{ parameters.Status }}", "message": {"run_status": "${{ parameters.Message }}"}}' \
        "https://api.getport.io/v1/actions/runs/${{ parameters.RunId }}"
    displayName: 'Send Status'
    condition: always()

The pipeline also needs a couple of variables from Port: the Port client ID and the Port client secret (referenced in the pipeline as PORT_CLIENT_ID and PORT_CLIENT_SECRET).

These values can be found in Port by selecting the … icon and then credentials.

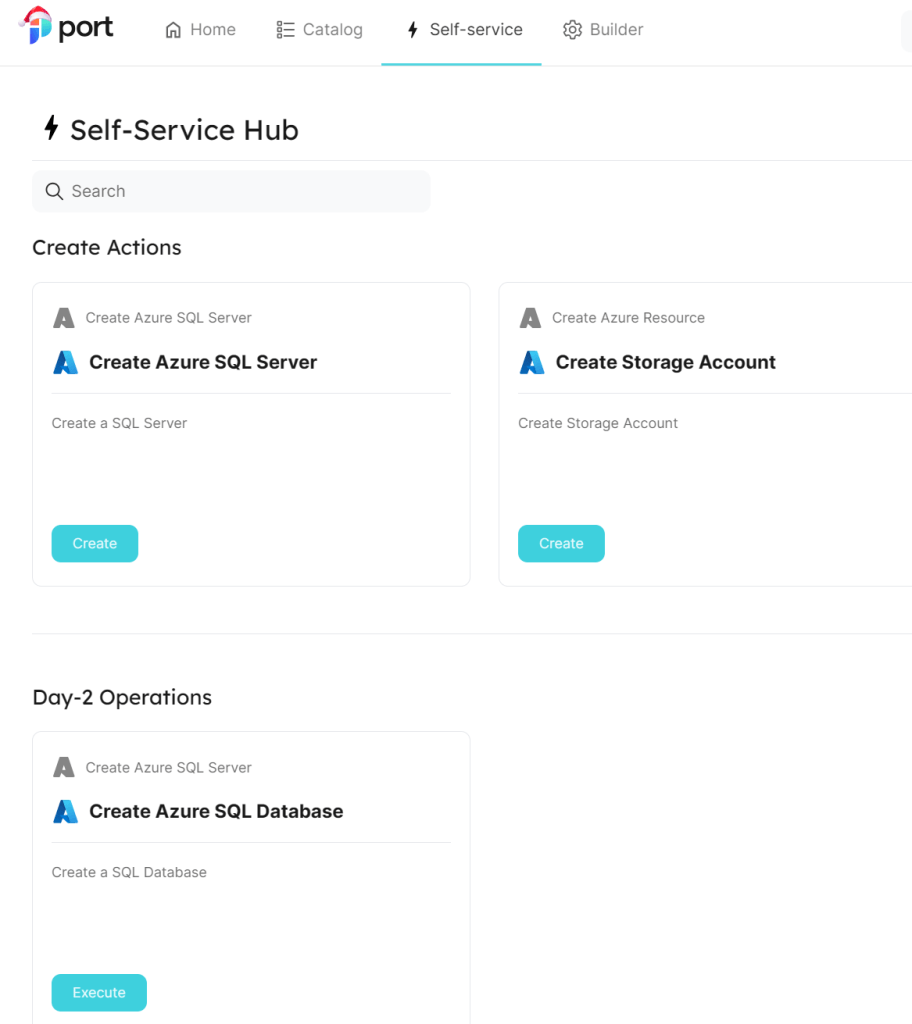

Self-Service

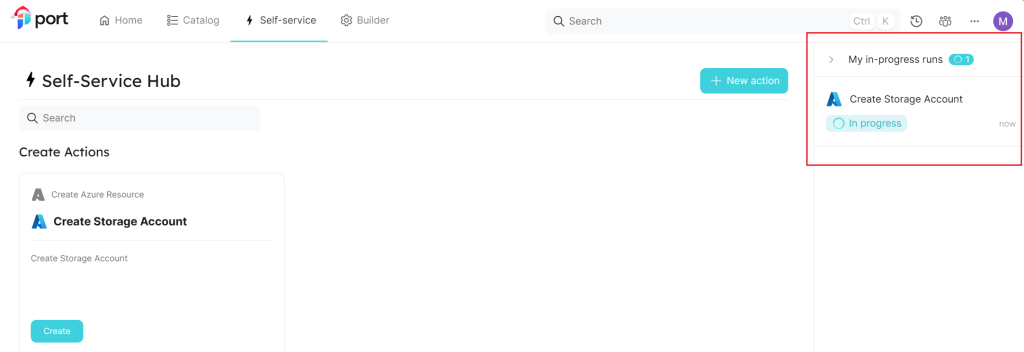

With the pipeline created we can now use the Self-Service Hub in Port to create our new resource.

Add some details and execute.

Once execution has begun, a status is shown on the right-hand side.

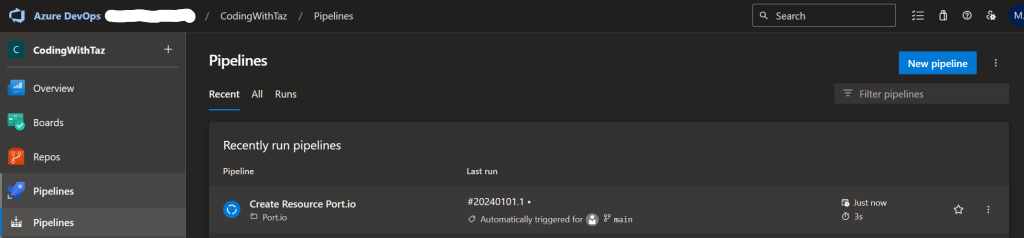

In Azure DevOps the webhook triggers a pipeline run.

While running, the pipeline returns status information to Port, and on completion it updates the status.

And on the catalog screen there is now an entry for the storage account.

Additional Blueprints

The pipeline has been created to be generic, so it should allow other types of resources to be created with accompanying Bicep configurations. The Create Azure Resource blueprint doesn’t seem to be the best place for resources that might need a Day-2 operation, so let’s add another blueprint for SQL Server with a Day-2 operation to add a SQL Database to an existing SQL Server.

Following the earlier example of creating blueprints and actions, first create a “Create Azure SQL Server” blueprint and then two actions: “Create Azure SQL Server”, using the Create type with a user form of Environment, AppName and Location (as previously), and “Create Azure SQL Database”, using the Day-2 type with a single user form entry of Name.

This should then look something like this:

The Self-Service screen now includes the additional actions.

Trying to run the Day-2 operation at this point would not offer a SQL Server to select.

But once a SQL Server has been created, it can be selected for the Day-2 operation and a database deployed to it.
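For the Day-2 action, the pipeline adds a parentName parameter holding the identifier of the selected entity, alongside the form's Name field and any entity properties that are not excluded. A database template that consumes those values could look something like the sketch below. This is an assumption-laden illustration rather than the template from the repository: it assumes the SQL Server entity's identifier is the server's resource name, that environment is the only entity property passed through, and that a Basic SKU is acceptable.

```bicep
// Hypothetical SQL database template for the Day-2 action.

@description('Port run ID, written into the parameters file by the pipeline.')
param runId string

@description('Database name, from the single Name form field (identifier assumed to be name).')
param name string

@description('Taken from the SQL Server entity properties in Port.')
param environment string

@description('Identifier of the selected SQL Server entity, assumed to equal the server name.')
param parentName string

param location string = resourceGroup().location

// Reference the server that the earlier Create action deployed.
resource sqlServer 'Microsoft.Sql/servers@2021-11-01' existing = {
  name: parentName
}

resource database 'Microsoft.Sql/servers/databases@2021-11-01' = {
  parent: sqlServer
  name: name
  location: location
  sku: {
    name: 'Basic'
    tier: 'Basic'
  }
  tags: {
    environment: environment
    portRunId: runId
  }
}

// Read by the pipeline after deployment, as with the other templates.
output resourceName string = database.name
```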

I’m still not entirely sure how to get the deployed databases to display in the catalog screen for the Day-2 operation, but the run history shows the Create Azure SQL Database action and payload.

All the Bicep and Azure Pipelines code shown here can be found on GitHub.

Final Thoughts

Before writing this article I had no prior experience of Port, and there may be different ways to achieve the above, but after the initial “where does everything go” part it became a lot easier to see where effort is required to build something functional. You might ask why use Bicep when you could import things using the supported integrations. Mainly it is because I use a lot of Bicep and Pulumi, and I wanted to see if Port was still an option even without direct support for those technologies. I think that it has merit, and as it is still evolving and improving, it’s possible Bicep could be supported one day.

Exploring the Self-Service part of Port was the driving force for this article, but there is so much more on offer to dive into and explore. Port’s free tier supports 15 registered users, so it is a great place to get started and try it out without having to think about costs.

I really like the direction that Platform Engineering is taking, and these types of tools are a game changer when it comes to taking the cognitive load of deployment, infrastructure, etc. away from developers and allowing them to concentrate on the features they are delivering instead of how those features get where they need to be.

I hope this article has been interesting and has encouraged you to take a look at Port for your own IDP needs. I am interested to see how Port evolves in the coming months and years.