Modern distributed systems generate a staggering amount of telemetry. Logs, metrics, and traces flow from dozens or hundreds of independently deployed services. Teams want deep visibility without drowning in operational overhead. They want consistency without slowing down delivery. And they want observability that scales with the system, not against it.

This is where Jaeger v2, OpenTelemetry, and GitOps converge into a clean, modern, future‑proof model.

This series walks through a complete, working setup that combines:

- Jaeger v2, built on the OpenTelemetry Collector

- OpenTelemetry auto‑instrumentation, with a focus on .NET

- ArgoCD, managing everything declaratively through GitOps

- A multi‑environment architecture, with dev/staging/prod deployed through ApplicationSets

Before we dive into YAML, pipelines, and instrumentation, it’s worth understanding why these technologies fit together so naturally and why they represent the future of platform‑level observability.

All manifests, ApplicationSets, and configuration used in this series are available in the companion GitHub repository.

🧭 The Shift to Jaeger v2: Collector‑First Observability

Jaeger v1 was built around a bespoke architecture: agents, collectors, query services, and storage backends. It worked well for its time, but it wasn’t aligned with the industry’s move toward OpenTelemetry as the standard for telemetry data.

Jaeger v2 changes that.

What’s new in Jaeger v2

- Built on the OpenTelemetry Collector

- Uses OTLP as its primary ingestion protocol

- Consolidates the v1 collector, query, and ingester into a single binary whose role is set by configuration, with no separate agent

- Runs Jaeger’s query service and UI as an extension inside the Collector

- Aligns with the OpenTelemetry ecosystem instead of maintaining parallel infrastructure

In practice, Jaeger v2 is no longer a standalone tracing pipeline.

It is a distribution of the OpenTelemetry Collector, with Jaeger’s query and UI components integrated into the same deployment.

This reduces operational complexity and puts Jaeger in the same ecosystem as the rest of your telemetry. Traces, metrics, and logs can all flow through the same Collector, each in its own pipeline, even though Jaeger itself stores and queries only traces.
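To make "a distribution of the Collector" concrete, here is a minimal Python sketch that mirrors the shape of a Jaeger v2 configuration as a plain dict and checks that every component referenced by the `traces` pipeline is actually defined. The component names (`otlp`, `batch`, `jaeger_storage`, `jaeger_query`, `jaeger_storage_exporter`) are modeled on the example configuration that ships with Jaeger v2 and may differ between releases; the `memstore` backend name and both helper functions are hypothetical.

```python
# A Jaeger v2 config expressed as a dict that mirrors the YAML structure.
# Component names are modeled on Jaeger v2's example config (an assumption:
# verify them against the release you deploy). "memstore" is hypothetical.
JAEGER_V2_CONFIG = {
    "receivers": {"otlp": {"protocols": {"grpc": {}, "http": {}}}},
    "processors": {"batch": {}},
    "exporters": {"jaeger_storage_exporter": {"trace_storage": "memstore"}},
    "extensions": {
        # Storage backends are declared once and referenced by name.
        "jaeger_storage": {"backends": {"memstore": {"memory": {"max_traces": 100000}}}},
        # The query service and UI run inside the same Collector process.
        "jaeger_query": {"storage": {"traces": "memstore"}},
    },
    "service": {
        "extensions": ["jaeger_storage", "jaeger_query"],
        "pipelines": {
            "traces": {
                "receivers": ["otlp"],
                "processors": ["batch"],
                "exporters": ["jaeger_storage_exporter"],
            }
        },
    },
}


def undefined_components(config: dict) -> list[str]:
    """Return service references that have no matching top-level definition."""
    missing = []
    service = config["service"]
    for name in service.get("extensions", []):
        if name not in config.get("extensions", {}):
            missing.append(f"extensions/{name}")
    for pipeline, stages in service["pipelines"].items():
        for kind in ("receivers", "processors", "exporters"):
            for name in stages.get(kind, []):
                if name not in config.get(kind, {}):
                    missing.append(f"{pipeline}/{kind}/{name}")
    return missing


def storage_names_consistent(config: dict) -> bool:
    """Check that the exporter and the query extension use a declared backend."""
    backends = config["extensions"]["jaeger_storage"]["backends"]
    exporter_store = config["exporters"]["jaeger_storage_exporter"]["trace_storage"]
    query_store = config["extensions"]["jaeger_query"]["storage"]["traces"]
    return exporter_store in backends and query_store in backends


print(undefined_components(JAEGER_V2_CONFIG))      # []
print(storage_names_consistent(JAEGER_V2_CONFIG))  # True
```

The point of the shape is that Jaeger’s storage and query live under `extensions`, next to an otherwise ordinary Collector `traces` pipeline.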

🌐 OpenTelemetry: The Universal Instrumentation Layer

OpenTelemetry has become the de facto standard for collecting telemetry across languages and platforms. Instead of maintaining a different vendor‑specific SDK, exporter, and agent for each backend, teams can rely on a unified model:

- One protocol (OTLP)

- One collector pipeline

- One set of instrumentation libraries

- One ecosystem of processors, exporters, and extensions
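As an illustration of what "one protocol" means on the wire, the sketch below builds a single‑span OTLP/HTTP JSON request body using only the Python standard library. It follows the OTLP JSON encoding (hex‑encoded trace and span IDs, nanosecond timestamps); the service name, span name, and `localhost` endpoint are assumptions. In a real application the OpenTelemetry SDK or auto‑instrumentation produces this payload for you.

```python
import json
import secrets
import time
import urllib.request


def build_otlp_json_span(service_name: str, span_name: str, duration_ms: int = 5) -> dict:
    """Build an OTLP/HTTP JSON body containing a single server span."""
    end_ns = time.time_ns()
    start_ns = end_ns - duration_ms * 1_000_000
    span = {
        # OTLP's JSON encoding uses hex strings for IDs:
        # 16 bytes (32 hex chars) for the trace ID, 8 bytes for the span ID.
        "traceId": secrets.token_hex(16),
        "spanId": secrets.token_hex(8),
        "name": span_name,
        "kind": 2,  # SPAN_KIND_SERVER
        # 64-bit nanosecond timestamps encoded as decimal strings
        # (the protobuf JSON mapping for 64-bit integers).
        "startTimeUnixNano": str(start_ns),
        "endTimeUnixNano": str(end_ns),
    }
    return {
        "resourceSpans": [
            {
                # Resource attributes identify the service that emitted the span.
                "resource": {
                    "attributes": [
                        {"key": "service.name", "value": {"stringValue": service_name}}
                    ]
                },
                "scopeSpans": [{"scope": {"name": "manual-example"}, "spans": [span]}],
            }
        ]
    }


payload = build_otlp_json_span("checkout-api", "GET /orders")
request = urllib.request.Request(
    "http://localhost:4318/v1/traces",  # 4318 is the default OTLP/HTTP port
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)
# urllib.request.urlopen(request)  # uncomment once a Collector is listening
```

The same payload shape is accepted by any OTLP‑capable receiver, which is what lets the backend change without touching the instrumentation.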

For application teams, this means:

- Less vendor lock‑in

- Less custom instrumentation

- More consistency across services

For platform teams, it means:

- A single collector pipeline to operate

- A single place to apply sampling, filtering, and routing

- A consistent deployment model across environments
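Because every service exports to the same Collector, sampling can be decided in one place. A useful property of trace‑ID‑based sampling is that it is consistent per trace: all spans with the same trace ID get the same keep‑or‑drop decision. The sketch below shows that idea with a hypothetical `keep_trace` function that compares the low 56 bits of the trace ID against a threshold; it is a simplified model, not the algorithm of any particular Collector processor.

```python
def keep_trace(trace_id_hex: str, sampling_percent: float) -> bool:
    """Decide from the trace ID alone whether a trace is kept.

    Every span sharing a trace ID gets the same answer, so a trace is kept or
    dropped as a whole regardless of which service emitted each span.
    """
    if not 0.0 <= sampling_percent <= 100.0:
        raise ValueError("sampling_percent must be between 0 and 100")
    # Treat the low 56 bits (last 14 hex digits) of the ID as a uniform random value.
    randomness = int(trace_id_hex[-14:], 16)
    threshold = int(sampling_percent / 100.0 * (1 << 56))
    return randomness < threshold


# Two spans from different services that belong to the same trace.
spans = [
    {"service": "frontend", "trace_id": "4bf92f3577b34da6a3ce929d0e0e4736"},
    {"service": "orders", "trace_id": "4bf92f3577b34da6a3ce929d0e0e4736"},
]
decisions = {s["service"]: keep_trace(s["trace_id"], 25.0) for s in spans}
print(len(set(decisions.values())))  # 1: both spans share the same decision
```

Because the decision depends only on the trace ID, the frontend and orders spans are kept or dropped together, which avoids partial traces in Jaeger.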

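And with the OpenTelemetry Operator, you can enable auto‑instrumentation for runtimes such as .NET without touching application code. Workloads opt in through a pod annotation (for .NET, `instrumentation.opentelemetry.io/inject-dotnet`), and when such a pod is created the Operator copies in the auto‑instrumentation libraries and sets the environment variables, startup hooks, and exporter settings that point the application at the Collector.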
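Conceptually, the Operator’s injection is a mutating admission webhook: when an opted‑in pod is created, its spec is rewritten before the pod starts. The Python sketch below models only the environment‑variable part of that mutation for .NET. The annotation key `instrumentation.opentelemetry.io/inject-dotnet` is the one the Operator uses (it also accepts the name of an `Instrumentation` resource instead of `"true"`, which this sketch ignores). The mount path, Collector endpoint, file paths, and the `inject_dotnet` function are hypothetical, and the real Operator also adds an init container, volumes, and further variables.

```python
import copy

# Annotation the OpenTelemetry Operator looks for on .NET workloads.
ANNOTATION = "instrumentation.opentelemetry.io/inject-dotnet"

# Hypothetical values; the real Operator derives these from the
# Instrumentation resource and its own defaults.
AUTO_HOME = "/otel-auto-instrumentation-dotnet"
COLLECTOR_ENDPOINT = "http://jaeger-collector:4318"


def inject_dotnet(pod: dict) -> dict:
    """Return a copy of an opted-in pod spec with .NET tracing env vars added.

    A simplified model of a mutating admission webhook: pods without the
    annotation set to "true" are returned untouched, and the input is never
    modified in place.
    """
    annotations = pod.get("metadata", {}).get("annotations", {})
    if annotations.get(ANNOTATION) != "true":
        return pod
    mutated = copy.deepcopy(pod)
    injected = [
        # Hypothetical: service name taken from the pod name.
        {"name": "OTEL_SERVICE_NAME", "value": mutated["metadata"]["name"]},
        {"name": "OTEL_EXPORTER_OTLP_ENDPOINT", "value": COLLECTOR_ENDPOINT},
        {"name": "OTEL_DOTNET_AUTO_HOME", "value": AUTO_HOME},
        # .NET startup hook that loads the instrumentation before Main runs.
        {
            "name": "DOTNET_STARTUP_HOOKS",
            "value": f"{AUTO_HOME}/net/OpenTelemetry.AutoInstrumentation.StartupHook.dll",
        },
    ]
    for container in mutated["spec"]["containers"]:
        env = container.setdefault("env", [])
        already_set = {var["name"] for var in env}
        # Values the team set explicitly win; only missing variables are added.
        env.extend(dict(var) for var in injected if var["name"] not in already_set)
    return mutated


pod = {
    "metadata": {"name": "orders-api", "annotations": {ANNOTATION: "true"}},
    "spec": {"containers": [{"name": "app", "image": "orders-api:1.0"}]},
}
mutated = inject_dotnet(pod)
print([var["name"] for var in mutated["spec"]["containers"][0]["env"]])
# ['OTEL_SERVICE_NAME', 'OTEL_EXPORTER_OTLP_ENDPOINT', 'OTEL_DOTNET_AUTO_HOME', 'DOTNET_STARTUP_HOOKS']
```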

🚀 Why GitOps (ArgoCD) Completes the Picture

Observability components are critical infrastructure. They need to be:

- Versioned

- Auditable

- Reproducible

- Consistent across environments

GitOps provides exactly that.

With ArgoCD:

- The Collector configuration lives in Git

- The Instrumentation settings live in Git

- The Jaeger UI and supporting components live in Git

- The applications live in Git

- The environment‑specific overrides live in Git

ArgoCD continuously compares the cluster with what’s declared in the repository. With automated sync and self‑healing enabled, it reverts drift on its own: if someone edits a Collector config by hand, or a deployment drifts in any other way, ArgoCD restores the declared state. To roll out a new sampling policy, you commit the change and let ArgoCD sync it.
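The self‑healing described above is a reconciliation loop: compare the desired state (rendered from Git) with the live state (read from the cluster) and act on the difference. Here is a minimal sketch of one reconciliation pass, with resources modeled as a dict keyed by a hypothetical `Kind/name` string; ArgoCD’s real diffing (normalisation, ignored fields, hooks) is far richer. The `prune` flag mirrors the intent of ArgoCD’s `syncPolicy.automated.prune` option, and running the pass whenever drift is detected is roughly what `selfHeal: true` enables.

```python
import copy


def plan(desired: dict, live: dict) -> dict:
    """Map each resource key to the action that makes live match desired."""
    actions = {}
    for key, spec in desired.items():
        if key not in live:
            actions[key] = "create"
        elif live[key] != spec:
            actions[key] = "update"  # drift, e.g. a manual kubectl edit
    for key in live.keys() - desired.keys():
        actions[key] = "prune"  # present in the cluster but no longer in Git
    return actions


def reconcile(desired: dict, live: dict, prune: bool = True) -> dict:
    """Run one reconciliation pass, mutating `live`; return the actions applied."""
    applied = {}
    for key, action in plan(desired, live).items():
        if action == "prune":
            if not prune:
                continue  # leave extra resources alone when pruning is off
            del live[key]
        else:
            live[key] = copy.deepcopy(desired[key])
        applied[key] = action
    return applied


# Desired state as committed to Git (hypothetical resource names and fields).
desired = {"ConfigMap/collector-config": {"sampling_percent": 10}}
# Live state after someone edited the config by hand and left a debug pod behind.
live = {
    "ConfigMap/collector-config": {"sampling_percent": 100},
    "Pod/debug-shell": {"image": "busybox"},
}

print(reconcile(desired, live))
# {'ConfigMap/collector-config': 'update', 'Pod/debug-shell': 'prune'}
print(reconcile(desired, live))  # {} once the cluster has converged
```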

Git becomes the single source of truth for your entire observability stack.

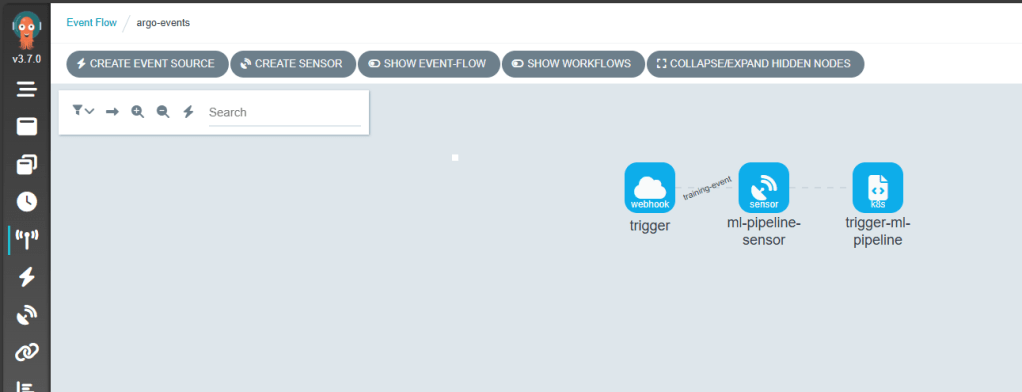

🏗️ How These Pieces Fit Together

Here’s the high‑level architecture this series will build:

- OpenTelemetry Collector (Jaeger v2)
  - Receives OTLP traffic
  - Processes and exports traces
  - Hosts the Jaeger v2 query and UI components

- Applications
  - Auto‑instrumented using OpenTelemetry agents
  - Emit traces to the Collector via OTLP

- ArgoCD
  - Watches the Git repository
  - Applies Collector, Instrumentation, and app manifests
  - Uses ApplicationSets to generate per‑environment deployments
  - Enforces ordering with sync waves
  - Ensures everything stays in sync
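The ApplicationSet and sync‑wave responsibilities in this list can also be sketched in a few lines of Python. A list generator in an ApplicationSet stamps out one Application per element by substituting parameters such as `{{env}}` into a template, and sync waves order resources by the integer in the `argocd.argoproj.io/sync-wave` annotation (default `0`, lowest wave first). The template fields, repository paths, and wave numbers below are assumptions for illustration; within a wave, ArgoCD applies additional ordering (for example by resource kind) that this model ignores.

```python
SYNC_WAVE = "argocd.argoproj.io/sync-wave"

# Hypothetical Application template; paths and namespaces are illustrative.
TEMPLATE = {
    "metadata": {"name": "observability-{{env}}"},
    "spec": {
        "source": {"path": "environments/{{env}}"},
        "destination": {"namespace": "observability-{{env}}"},
    },
}


def render(node, params: dict):
    """Recursively replace {{key}} placeholders in every string value."""
    if isinstance(node, dict):
        return {key: render(value, params) for key, value in node.items()}
    if isinstance(node, list):
        return [render(item, params) for item in node]
    if isinstance(node, str):
        for key, value in params.items():
            node = node.replace("{{" + key + "}}", value)
    return node


def generate_applications(elements: list, template: dict) -> list:
    """Model of a list generator: one rendered Application per element."""
    return [render(template, element) for element in elements]


def sync_order(resources: list) -> list:
    """Sort resources by sync wave, lowest first; a missing annotation means wave 0."""
    def wave(resource: dict) -> int:
        annotations = resource.get("metadata", {}).get("annotations", {})
        return int(annotations.get(SYNC_WAVE, "0"))
    return sorted(resources, key=wave)


apps = generate_applications([{"env": "dev"}, {"env": "staging"}, {"env": "prod"}], TEMPLATE)
print([app["metadata"]["name"] for app in apps])
# ['observability-dev', 'observability-staging', 'observability-prod']

resources = [
    {"kind": "Deployment", "metadata": {"annotations": {SYNC_WAVE: "2"}}},
    {"kind": "OpenTelemetryCollector", "metadata": {"annotations": {SYNC_WAVE: "0"}}},
    {"kind": "Instrumentation", "metadata": {"annotations": {SYNC_WAVE: "1"}}},
]
print([r["kind"] for r in sync_order(resources)])
# ['OpenTelemetryCollector', 'Instrumentation', 'Deployment']
```

Placing the Collector and the `Instrumentation` resource in earlier waves than the application matters because the Operator injects instrumentation when a pod is created; an app that starts before its `Instrumentation` resource exists is likely to run uninstrumented until it is restarted.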

This architecture is intentionally simple. It’s designed to be:

- Easy to deploy

- Easy to understand

- Easy to extend into production patterns

- Easy to scale across environments and clusters

🎯 What You’ll Learn in This Series

Over the next four parts, we’ll walk through:

Part 2 – Deploying Jaeger v2 with the OpenTelemetry Collector

A working Collector configuration, including receivers, processors, exporters, and the Jaeger UI.

Part 3 – Auto‑instrumenting .NET with OpenTelemetry

How to enable tracing in a .NET application without modifying code, using the OpenTelemetry .NET auto‑instrumentation agent.

Part 4 – Managing Everything with ArgoCD (GitOps)

How to structure your repo, define ArgoCD Applications, and sync the entire observability stack declaratively.

Part 5 – Troubleshooting, Scaling, and Production Hardening

Sampling strategies, storage backends, multi‑cluster patterns, and common pitfalls.

🧩 Why This Matters

Observability is no longer optional. It’s foundational. But the tooling landscape has been fragmented for years. Jaeger v2, OpenTelemetry, and GitOps represent a convergence toward:

- Standardisation

- Operational simplicity

- Developer autonomy

- Platform consistency

This series is designed to give you a practical, reproducible path to adopting that model, starting with the simplest working setup and building toward production‑ready patterns.

You can find the full configuration for this part, including the Collector manifests and the ArgoCD setup, in the GitHub repository.